The first video olved stery! In this one we look at an FCI node that will not come online after patching.

Issue Introduction

This was an issue I almost forgot about, during the patching of an FCI to a new CU, the inactive server patches without an issue – all is well. Upon attempting a failover to patch the last (and currently active) instance, however, fails to failover. The instance just sits in online pending and won’t come online, fails, and goes back to the original server which is not yet patched. This *only* happens during a patch. Does this mean a SQL patch from Microsoft is bad (I mean, Windows Updates jokes aside) as there have been a few in the past…

Questions That Need Answered

1. Why won’t the instance fail over to the newly patched server?

2. Why is it when the patch is removed the failover occurs without issue?

3. Is this a bad patch from Microsoft?

Initial Data Collection

When working with a SQL Server FCI, the first three most important items to gather when there is an issue is the cluster log for each node, the SQL Server errorlog, and the Windows event log. Each of these logs gives you a different view or perspective into any potential issues, and in this particular situation since the instance fails to come online, we’re going to need the cluster and SQL logs at a minimum. Gathering the cluster logs is extremely trivial since Windows Server 2012 as there is a wonderful powershell cmdlet (which can be installed on any administrators computer but installing the Windows Server RSAT tools) called Get-ClusterLog which has a few different switches and options. My personal favorites are to use -Destination so that we control where the files go, and -UseLocalTime which can help when the issue has occurred and you know the time which coincides with the same time zone as is on the server, negating the need to do UTC conversion.

Checking The Collected Data

Looking into the cluster log, there are two main items that stand out:

1. Failed to retrieve the ftdata root registry value, last error = 0

2. ReAclDirectory Failed to apply security to S:\MSSQL16.MSSQLSERVER\MSSQL\Data (87)

Ok, sure. The first item is talking about a registry directory and given an error value of 0 which normally means success (typical hresult success is error_success which is 0) and this makes me a bit suspect that it’s the actual issue. The second item is a failure to properly apply ACLs to the Data directory of the instance and has an error in the parenthesis (87). Looking up the error code of 87 turns out to be, “The Parameter is Incorrect”, which is less than helpful.

One of the ways to investigate these potential issues is to use a tool such as ProcMon since it installs a kernel driver which will gather different points of data from the kernel level and thus will get more system wide and deep information than just doing some basic auditing in Windows. Unfortunately since we only have the data that we currently have, a reproduction of the issue and data capture is really the next step. The logs can only tell us so much based on what is logged… and there’s the whole am I logging too much or too little discussion where logging everything just isn’t feasible… in fact, logging _everything_ not only turns into a data storage nightmare, but imagine the amount of “errors” that occur which are _perfectly normal_ on a running system. Now imagine seeing those all over the place and having to go investigate to see whether that’s normal or not, almost every time. No thanks, hard pass.

Gathering And Checking Data During The Issue

The next step was to gather a procmon capture while the instance was trying to start up on the newly patched (with no errors in patching) system. The big takeaway here is that we had an error pointing to the registry and one pointing to a directory, both of which procmon will capture.

After reproducing the issue and saving the procmon capture, we can look at the results. Checking the potential registry issue first we find that there just isn’t anything. There’s no ftdata registry check at all, which kind of makes sense since the error was 0, which typically means success. This could be that the call to do that didn’t run, but also that the GetLastError for the thread returned that there wasn’t any error… which would mean that the issue happened somewhere else and cascaded to this call (potentially, just conjecture).

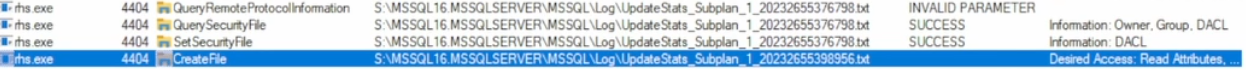

The second issue was about ACLing the Data directory, so let’s reset our filters and see what that shows. Remember that the error was “the parameter is incorrect”, whatever that means in this context. Setting our filter up to look for the “Data” directory paths, we do see that there are some “INVALID PARAMETER” results:

However, the operation was QueryRemoteProtocolInformation which doesn’t seem to be applicable here, looking at the documentation for the usage. If we compare this to a working example, the same items occur, this is doubtfully the issue. So now what? Well, let’s see if anything was actually having ACLs set to it, so let’s create another filter and see what we find:

Alright so we are successfully writing security information to files in these folders, do we get any errors?

Nope, sure don’t. So that’s extremely odd, we get no errors but then are told that we get an error that the parameter is incorrect. Let’s scroll through the results and see if anything pokes its head or gives us a lead. Scrolling through you’ll notice there are many thousands of files having their security options written to, in fact 148,000+ files. That’s… a lot of files, too many it seems. Why would there be so many files?

All of these files seem to be some sort of maintenance log output from SQL Server maintenance place executions. There’s a lot of them but they don’t seem to be hurting anything, everything succeeds. Let’s scroll to the end and see what’s happening, maybe it’ll shed some light.

All of these files seem to be some sort of maintenance log output from SQL Server maintenance place executions. There’s a lot of them but they don’t seem to be hurting anything, everything succeeds. Let’s scroll to the end and see what’s happening, maybe it’ll shed some light.

It just stops writing to files, in fact it stops in the middle of a create file call. Taking a look at that directory there are thousands of these files, in fact it takes explorer quite some time to even visually load the directory, let alone scroll through it. I think we’re on to something here… let’s see how long, according to our procmon capture, we were spending doing all of this work. Taking a swag at the start and stop times it ends up being about 3 minutes. In technology I have rarely found that computers, on their own, like to error on perfect intervals. Sure, 2.5345 second later sounds fine but it’s really only humans that tend to make thing happen at 1 minute, 2 minutes, 5 minutes, etc., so there’s a good chance that this just stopped running after it hit some type of timeout interval. Now this leads me to believe that the number of files in this folder is definitely an issue, but it also makes you wonder why it had an issue after 3 minutes.

Possible Mitigations and Results

The thing that stands out the most is to remove all the junk log files from the maintenance plans and then edit the executions to not produce these files. I don’t know anyone that looks at them, let alone archives or otherwise ingests them in any meaningful way. In fact, removing all of these files does end up allowing the instance to properly come online, and it does this quite quickly. Why would having a bunch of files, though, cause the SQL Instance to not start up after patching… after all, when the patch was removed, the instance would start up with no issues.

This is where if we look at who was doing this work, we’ll get an idea where to look to answer this. The process doing this is RHS which is part of the cluster responsible for loading and executing the resource specific implementation dll. In this case it’s sqlsrvres.dll since it’s an FCI and if we use that information to look at the resource, we’ll see there’s a pending timeout setting which is set to (dramatic music) 180 seconds, otherwise properly known as 3 minutes. If the resource doesn’t come online in that time the cluster will take corrective action which in this case was to stop it and try to bring it online somewhere else (which succeeded) so that the cluster can maintain availability, which is why you’re using clustering in the first place (I would assume).

Bringing It All Together and Answering Questions

1. Why won’t the instance fail over to the newly patched server?

A: The number of files that needed to have security descriptors written was very high with the average amount of time to do said individual operation on each file being enough that the pending timeout hit without getting through all of the files. This happens by default when an instance is patched so that the security is reset in case any items or changes have been made and is a normal part of patching.

2. Why is it when the patch is removed the failover occurs without issue?

A: Removing the patch removes the specific control structures that tell the resource dll to ACL the directories and contained files, thus removing the patch caused this not to happen when startup occurred.

3. Is this a bad patch from Microsoft?

A: No, it’s not, the patch is perfectly fine.

Closing Thoughts

There’s this immediate reaction to assume the thing that fails is the cause of the failure. It’s natural and I understand, however when working in technology it’s rarely that easy. Sure sometimes it’s quite straightforward but many if not most of the time it’s really not and there are a variety of factors and items that all contribute to the issue wherein changing a single item, even though many others are still horrible or incorrect, can “stop” the issue from occurring. It doesn’t mean the issue is gone, it’s just another ticking clock waiting to sound the alarm. We should take care to actually look into issues and understand them, which I’m finding is extremely uncommon in today’s world where the impetus on consuming services from others (cough, cloud, cough) and expecting the others to troubleshoot and and understand everything bottom to top with extremely short investigation times is more of the expectation. Sadly, when these types of things happen (and they happen all the time) there’s rarely anyone left with the knowledge or ability to troubleshoot and I’ve found this to be a key differentiator between places that are able to deal with issues in a timely and responsive manner and places that just blame everyone else and never get any better, ultimately ended up churning through time and money to “move” to something else rather than fix the underlying issue (staff knowledge or expectations of providers).

That was a great walkthrough using procmon to troubleshoot the issue thanks Sean!